In 2014 I was trying to replicate the groundbreaking University of Texas at Dallas paper on artificial muscles made from nylon fishing line. For that I needed a few tools: a drill, a heat gun, some nylon line, etc. While I was on Amazon, I also took a look at duffle bags. My father lent (as in gave) me his when I came to Lisbon, and I had worn it out until it was barely holding together. For weeks after that order, my Amazon page suggested shovels, different kinds of drills and saws, all accompanied by duffle bags, which gave off the vibe that I tortured and buried people for a living.

The cause of this is recommendation (or suggestion) engines. They are the little pieces of software that suggest what music to play next, what else you may want to see on a news feed, or what you may want to order. They are also the little pieces of software that let people radicalize themselves on Facebook articles or YouTube videos, and that let large groups of people build echo chambers, shielded from a healthy diet of information.

Recommendation engines can be large or small, but in general they all work in much the same way. Imagine that each point is a song you listened to, or something you bought:

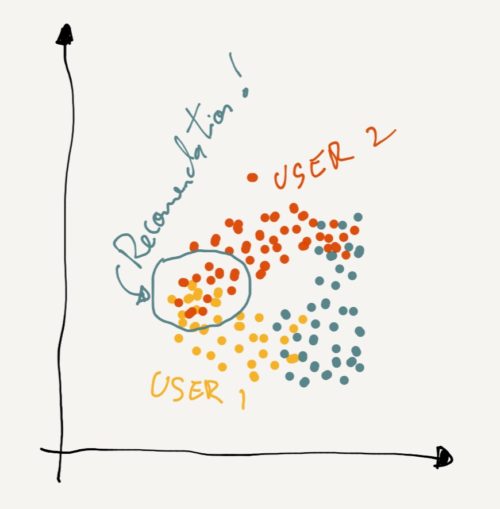

What a recommendation engine does is find people similar to you; whatever they all like in common is what gets recommended to you.
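The "find similar people" idea is usually called user-based collaborative filtering. Here is a minimal sketch, assuming each user is just a set of items and using Jaccard similarity (shared items divided by all items) as the measure of how similar two people are. The names `jaccard` and `recommend` and the toy purchase histories are hypothetical; real engines use much richer signals.

```python
from collections import Counter


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two users' histories: |A ∩ B| / |A ∪ B|."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def recommend(me: str, histories: dict[str, set[str]], k: int = 2, n: int = 3) -> list[str]:
    """Recommend items owned by the k users most similar to `me`."""
    mine = histories[me]
    # Rank every other user by similarity to me and keep the top k neighbours.
    neighbours = sorted(
        (u for u in histories if u != me),
        key=lambda u: jaccard(mine, histories[u]),
        reverse=True,
    )[:k]
    # Count how many neighbours have each item I don't have yet.
    votes = Counter(item for u in neighbours for item in histories[u] if item not in mine)
    # Items shared by the most neighbours come first; ties are broken alphabetically.
    ranked = sorted(votes.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:n]]


# Hypothetical toy data, loosely echoing the shopping trip above.
histories = {
    "me": {"drill", "heat gun", "nylon line", "duffle bag"},
    "alice": {"drill", "nails", "hammer"},
    "bob": {"drill", "heat gun", "hammer", "saw"},
    "carol": {"duffle bag", "shovel", "saw"},
    "dave": {"guitar strings", "capo"},
}

print(recommend("me", histories, k=3, n=4))  # ['hammer', 'saw', 'nails', 'shovel']
```

Nothing in the result says anything about why I bought a duffle bag: the saw and the shovel show up only because my nearest neighbours happened to own them.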

You can already see the main problem with these systems: they inevitably converge on the lowest common denominator. That’s why you receive bad recommendations on your Amazon page. If you buy a drill, Amazon is going to show you what other people bought with that drill: maybe some nails, maybe a hammer.

The same thing happens when you are watching stuff on YouTube. For instance, you start watching a Kendrick Lamar song. A lot of people watch that song, but a lot of them also listen to Drake. Since you listened to that, well, a lot of people also listened to this song by Cloe x Warrior. And by the way, next up is a vlog from some unknown guy. It’s not that I like all those people along the way; it’s just what most people watched.
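That chain of "next up" picks can be sketched in a few lines. This is a minimal sketch under a simplifying assumption: "next" is the unplayed item most often watched in the same session as the current one (a plain item-to-item co-occurrence rule, not YouTube’s actual algorithm). The session data and the names `co_watch_counts` and `autoplay` are hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_watch_counts(sessions: list[list[str]]) -> Counter:
    """Count how often each ordered pair of items appears in the same session."""
    counts: Counter = Counter()
    for session in sessions:
        for a, b in combinations(set(session), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts


def autoplay(start: str, sessions: list[list[str]], steps: int) -> list[str]:
    """Greedily follow the 'people who watched this also watched' edge."""
    counts = co_watch_counts(sessions)
    chain = [start]
    for _ in range(steps):
        current = chain[-1]
        # Candidates: items co-watched with the current one and not yet played.
        candidates = {b: c for (a, b), c in counts.items() if a == current and b not in chain}
        if not candidates:
            break
        # The most co-watched item wins; ties are broken alphabetically.
        chain.append(min(candidates, key=lambda b: (-candidates[b], b)))
    return chain


# Hypothetical viewing sessions; "rare live set" was watched only once.
sessions = [
    ["Kendrick Lamar", "Drake"],
    ["Kendrick Lamar", "Drake"],
    ["Kendrick Lamar", "rare live set"],
    ["Drake", "Cloe x Warrior"],
    ["Drake", "Cloe x Warrior"],
    ["Cloe x Warrior", "unknown vlog"],
    ["Cloe x Warrior", "unknown vlog"],
]

print(autoplay("Kendrick Lamar", sessions, steps=3))
# ['Kendrick Lamar', 'Drake', 'Cloe x Warrior', 'unknown vlog']
```

Because every step takes the majority path, the one-off "rare live set" is never played, however many steps you allow.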

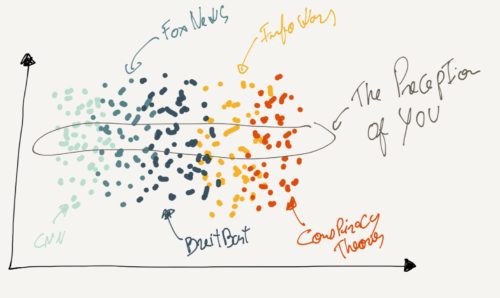

This may seem quite innocuous, but the problem is that it lets you fall into deep content holes. You start by watching a Fox News clip, next it recommends a Breitbart clip, then here’s an Infowars clip, and since you’re here, have some guy in a basement talking about QAnon.

In general there’s nothing wrong with watching some crazy Alex Jones rants. The problem is that the engine can’t differentiate between one night and your identity. I remember once a friend helped me assemble a cabinet in my house, and afterwards, during dinner, we watched some videos from a YouTube channel he liked. It wasn’t my cup of tea, but it took weeks for that channel to disappear from my recommendations. The engine tends to capture a mistaken perception of you. And if we are talking about politics and all your consumption comes from YouTube, your perception of reality will become biased by whatever the recommendations happen to be. The real problem here is that these systems cannot capture serendipity.

Serendipity is really hard to explain. Imagine that you are at a party and the DJ plays all the current hits. But suddenly he plays a song you’ve never heard that just feels and sounds great. That’s serendipity: the pleasure of the unplanned discovery. Going down the rabbit hole one night on conspiracy theory videos doesn’t mean that I’m all about that. It’s just a fortuitous trip I took.

I’m under the impression that the problem here is that real-time recommendations are impossible without context or a notion of time. A system that played the right songs for a sunny afternoon would need to recognize concepts beyond whatever other people are listening to. That’s why, even after years, no one has created an AI system that could replace a DJ at a wedding or at a radio station. There’s something of a higher order that no recommendation system can capture right now.

Unfortunately, happy accidents require rough edges, not smooth surfaces. Until recommendation engines can leave some of those edges in, we’ll have to tolerate bad suggestions.